We'll try reloading the data with COPY and then re-exporting it with UNLOAD, using the cast to double precision that you mentioned. The wait is roughly 6 weeks, because we're on the trailing track.

We will look at some ways of importing data into a Redshift cluster from an S3 bucket, as well as exporting data from Redshift to an S3 bucket. See the following code: `unload select from edw.`
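
Here is a minimal sketch of the reload-and-re-export round trip. It is written in Python that only builds the SQL text and does not connect to Redshift. The table `edw.sales`, its columns `id` and `amount`, the S3 prefixes, and the IAM role ARN are hypothetical placeholders. The sketch also assumes the files being reloaded are CSV. Because UNLOAD takes its query as a single-quoted string, any quotes inside the query are doubled.

```python
# Sketch only: builds Redshift COPY/UNLOAD statement text; it does not execute them.
# Table, column, bucket, and role names used below are hypothetical placeholders.


def quote_literal(text: str) -> str:
    """Return text as a SQL string literal, doubling any embedded single quotes."""
    return "'" + text.replace("'", "''") + "'"


def build_copy(table: str, s3_prefix: str, iam_role: str, fmt: str = "CSV") -> str:
    """COPY the files under s3_prefix back into table (CSV assumed by default)."""
    return (
        f"COPY {table} FROM {quote_literal(s3_prefix)} "
        f"IAM_ROLE {quote_literal(iam_role)} FORMAT AS {fmt};"
    )


def build_unload(table, columns, double_cols, s3_prefix, iam_role) -> str:
    """UNLOAD table as Parquet, casting every column in double_cols to DOUBLE PRECISION."""
    select_list = ", ".join(
        f"CAST({col} AS DOUBLE PRECISION) AS {col}" if col in double_cols else col
        for col in columns
    )
    query = f"SELECT {select_list} FROM {table}"
    # UNLOAD expects the query itself as a quoted string literal.
    return (
        f"UNLOAD ({quote_literal(query)}) TO {quote_literal(s3_prefix)} "
        f"IAM_ROLE {quote_literal(iam_role)} FORMAT AS PARQUET;"
    )


if __name__ == "__main__":
    role = "arn:aws:iam::123456789012:role/MyRedshiftRole"  # placeholder ARN
    print(build_copy("edw.sales", "s3://eltblogpost/sales_csv/", role))
    print(build_unload("edw.sales", ["id", "amount"], {"amount"},
                       "s3://eltblogpost/sales_parquet/", role))
```

Casting in the SELECT list means the Parquet files get a double column. The table definition itself is left unchanged.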

# Redshift UNLOAD to Parquet: how to

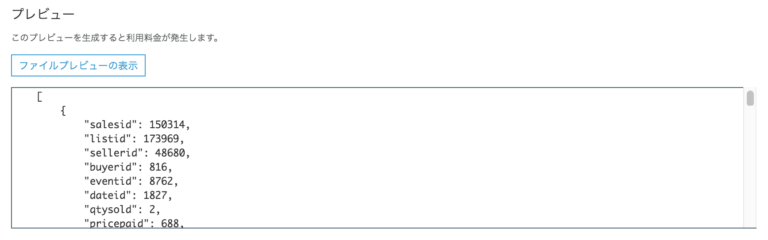

So, we'll have to wait 2 release cycles, i.e. 1285 Decem…

In this article, we are going to learn about Amazon Redshift and how to work with CSV files. We can unload Redshift data to S3 in Parquet format directly. You can schedule an UNLOAD command to run daily and export data from a table to the data lake on Amazon S3. This assumes that you have the AWS CLI installed and configured for your AWS account. It also assumes you have an existing Amazon S3 bucket named `eltblogpost` in your data lake to store the data unloaded from Amazon Redshift. Because S3 bucket names are unique across all AWS accounts, replace `eltblogpost` with your own bucket name wherever it appears in the sample code.
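
The daily export could look something like this minimal sketch. It only builds the UNLOAD text for one day's rows. Running it on a schedule (for example, a cron job that submits the SQL) is left out. The table `edw.sales`, the date column `sale_date`, the `dt=YYYY-MM-DD` prefix layout, and the IAM role ARN are hypothetical placeholders.

```python
# Sketch only: builds the text of a daily UNLOAD to Parquet. Scheduling and
# execution (for example, a cron job that submits this SQL) are out of scope.
from datetime import date

BUCKET = "eltblogpost"  # replace with your own, globally unique bucket name
IAM_ROLE = "arn:aws:iam::123456789012:role/MyRedshiftRole"  # placeholder ARN


def daily_unload_sql(table: str, date_column: str, run_date: date) -> str:
    """Export one day's rows of table to a dt=YYYY-MM-DD prefix as Parquet."""
    day = run_date.isoformat()
    query = f"SELECT * FROM {table} WHERE {date_column} = '{day}'"
    # The query is itself a string literal inside UNLOAD, so double its quotes.
    escaped = query.replace("'", "''")
    prefix = f"s3://{BUCKET}/{table.replace('.', '/')}/dt={day}/"
    return (
        f"UNLOAD ('{escaped}') TO '{prefix}' "
        f"IAM_ROLE '{IAM_ROLE}' FORMAT AS PARQUET;"
    )


if __name__ == "__main__":
    # A daily job would call this with today's (or yesterday's) date.
    print(daily_unload_sql("edw.sales", "sale_date", date.today()))
```

Writing each day to its own prefix keeps one day's files separate from the others. By default, UNLOAD refuses to overwrite existing files at the target. Rerunning the same day therefore needs either a cleared prefix or the `ALLOWOVERWRITE` option.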

As of December 2019, Redshift supports the Parquet format in the UNLOAD command, so Spark is no longer needed for this. However, we're trying to get this fixed sooner than we'll get the bug fix. Judging by this thread, that fix may ship in the next release cycle's current-track release. Currently, the implementation of UNLOAD throws an exception if it receives any export format other than CSV.

You can see patches coming ahead of time, because the Redshift events tell you when your clusters will be patched. Events numbered 2026 that contain the words "system maintenance" indicate an OS patch. Events numbered 2025 that contain the words "database maintenance" indicate a Redshift software version upgrade.
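
To watch for these patches, a sketch like the following could scan cluster events and flag the messages that contain the phrases above. It assumes input such as the JSON printed by `aws redshift describe-events --source-type cluster`, and that each entry in its `Events` list carries `SourceIdentifier` and `Message` fields. It matches on message wording only, not on the event numbers. The labels `os-patch` and `redshift-upgrade` are hypothetical names chosen for this example.

```python
# Sketch only: classifies Redshift event messages by the phrases described above.
# Assumes a describe-events style JSON document with an "Events" list.
import json


def classify_event(message: str) -> str:
    """Map an event message to a hypothetical patch label."""
    text = message.lower()
    if "system maintenance" in text:
        return "os-patch"          # OS patch
    if "database maintenance" in text:
        return "redshift-upgrade"  # Redshift software version upgrade
    return "other"


def upcoming_patches(describe_events_json: str) -> list[tuple[str, str]]:
    """Return (cluster identifier, label) pairs for maintenance-related events."""
    payload = json.loads(describe_events_json)
    results = []
    for event in payload.get("Events", []):
        label = classify_event(event.get("Message", ""))
        if label != "other":
            results.append((event.get("SourceIdentifier", "?"), label))
    return results
```

Keyword matching is deliberately loose here. If the event wording changes, only `classify_event` needs updating.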

# Redshift UNLOAD to Parquet: patch

The last release cycle we got in us-east-1 this week (on the trailing track) included an OS patch too. That estimate of the wait holds only as long as the patch with the fix doesn't also span an OS patch. You're probably correct that we could get Redshift to downgrade a cluster to a version that has the bug.

Solution: in Enterprise Data Catalog (EDC) 10.2.2 HotFix 1, multiple unload options can be passed through the Unload Options property of the Redshift profile scanner. For version 10.2.2 Service Pack 1, an emergency bug fix (EBF-14484) that includes this feature is also available. Contact Informatica Support to get the EBF.
